Epistemic status: This post is a synthesis of ideas that are, in my experience, widespread among researchers at frontier labs and in mechanistic interpretability, but rarely written down comprehensively in one place - different communities tend to know different pieces of evidence. The core hypothesis - that deep learning is performing something like tractable program synthesis - is not original to me (even to me, the ideas are ~3 years old), and I suspect it has been arrived at independently many times. (See the appendix on related work.)

This is also far from finished research - more a snapshot of a hypothesis that seems increasingly hard to avoid, and a case for why formalization is worth pursuing. I discuss the key barriers and how tools like singular learning theory might address them towards the end of the post.

Thanks to Dan Murfet, Jesse Hoogland, Max Hennick, and Rumi Salazar for feedback on this post.

Sam Altman: Why does unsupervised learning work?

Dan Selsam: Compression. So, the ideal intelligence [...]

---

Outline:

(02:31) Background

(09:06) Looking inside

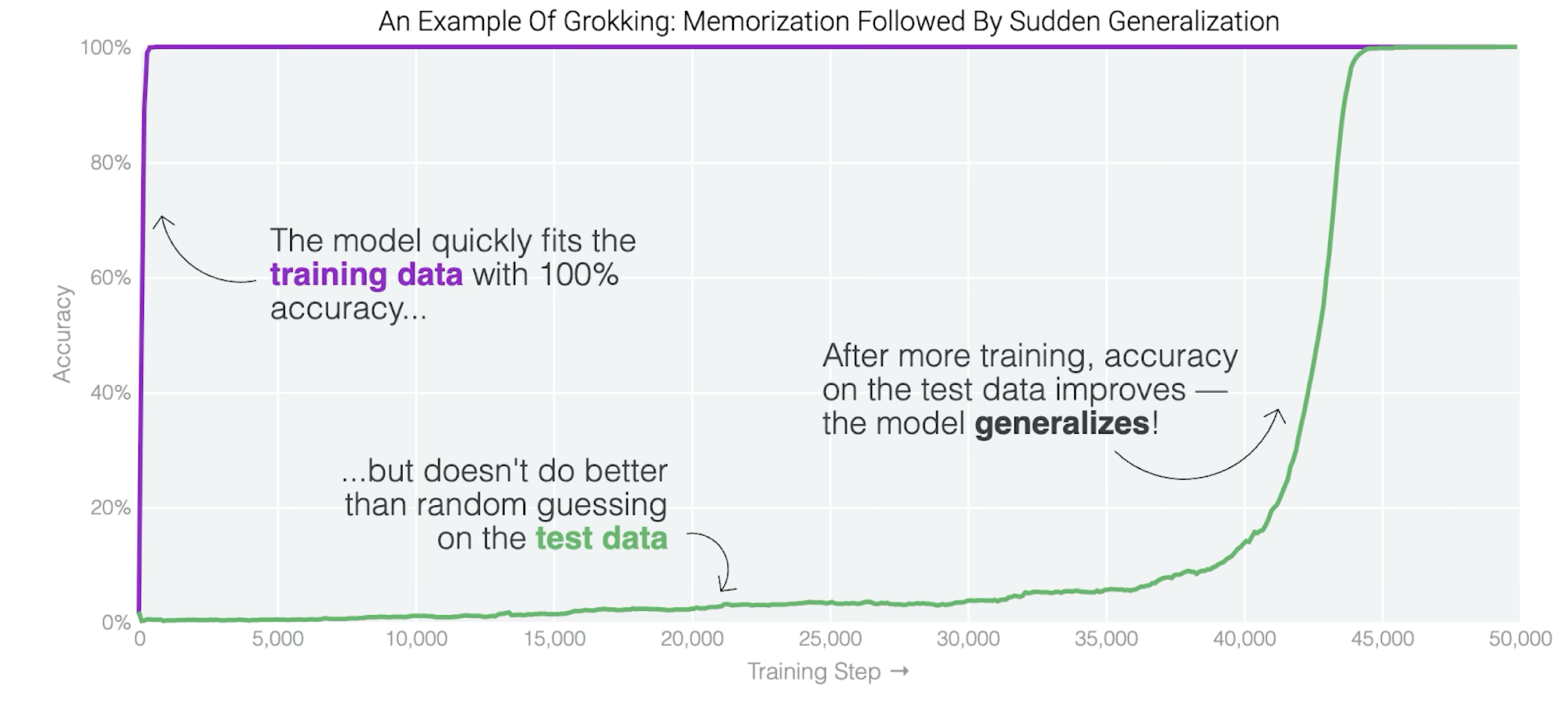

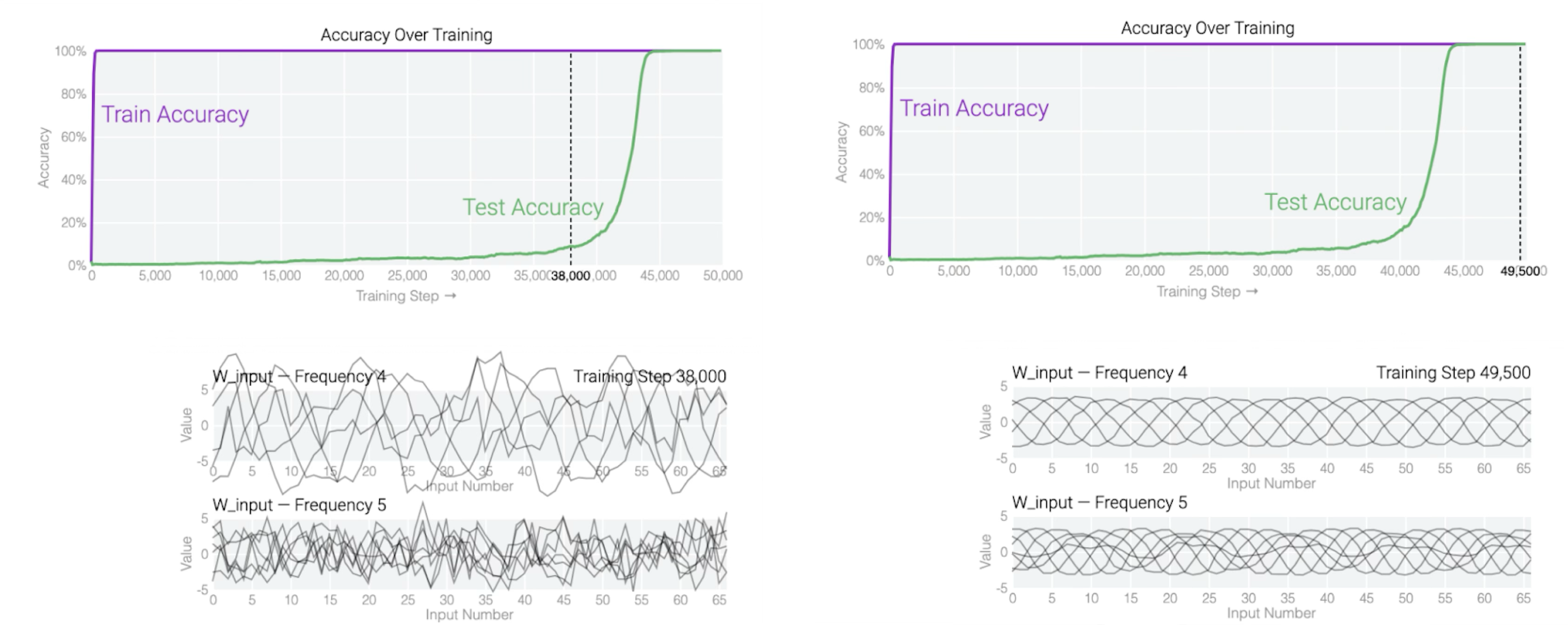

(09:09) Grokking

(16:04) Vision circuits

(22:37) The hypothesis

(26:04) Why this isn't enough

(27:22) Indirect evidence

(32:44) The paradox of approximation

(38:34) The paradox of generalization

(45:44) The paradox of convergence

(51:46) The path forward

(53:20) The representation problem

(58:38) The search problem

(01:07:20) Appendix

(01:07:23) Related work

The original text contained 14 footnotes which were omitted from this narration.

---

First published:

January 20th, 2026

Source:

https://www.lesswrong.com/posts/Dw8mskAvBX37MxvXo/deep-learning-as-program-synthesis-1

---

Narrated by TYPE III AUDIO.
